GDC 2024 Sessions Are Now on GDC Vault

The 2024 Game Developers Conference has arrived on GDC Vault, giving subscribers access to these and thousands more game-changing GDC videos.

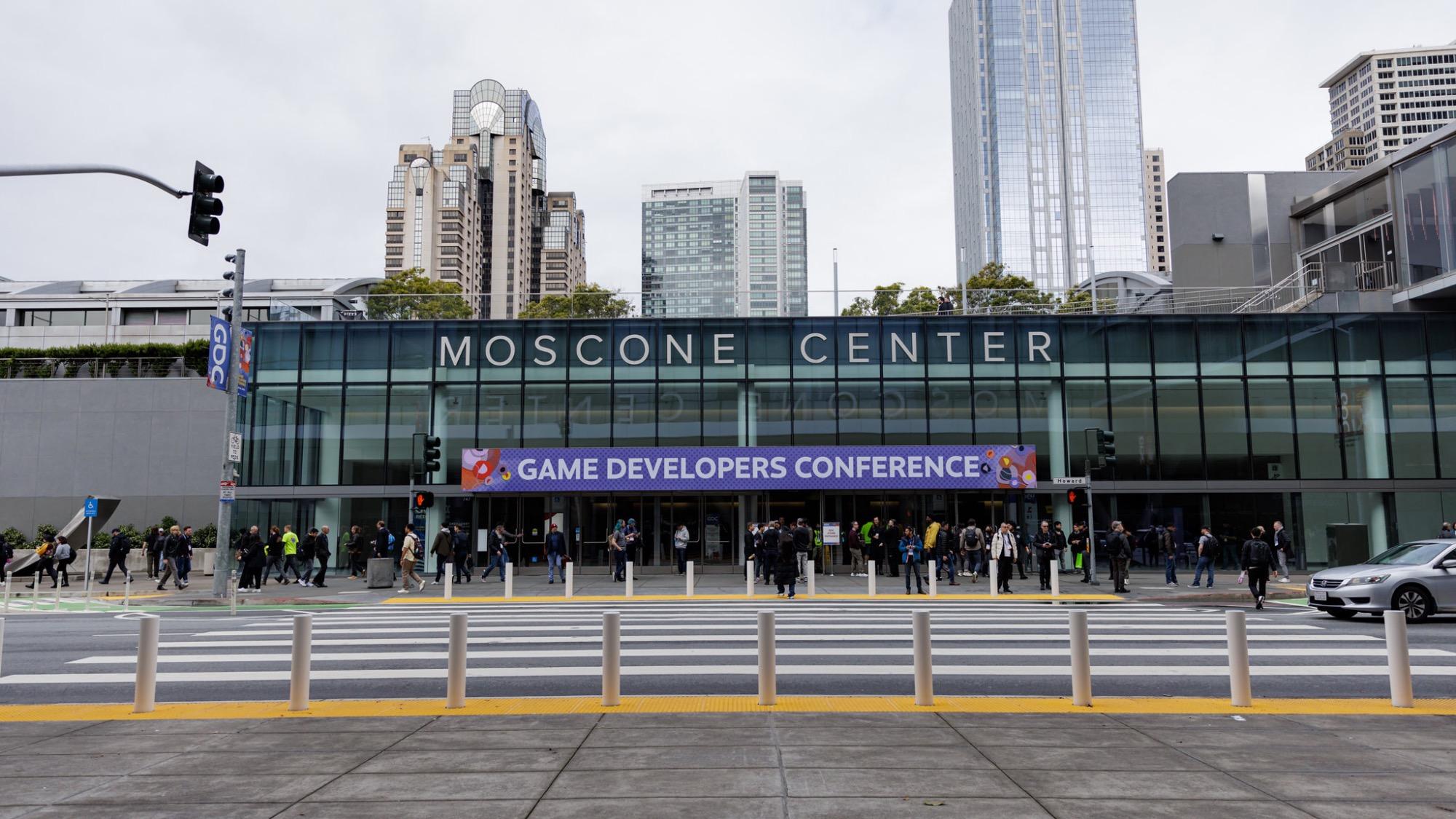

It's the professional game developer event of the year, and what a year it was. The 2024 Game Developers Conference hosted nearly 30,000 registered attendees for five full days of inspiring sessions and networking opportunities with peers and leaders in the games industry—and we're already gearing up for 2025.

We can hardly believe it ourselves: We've reached the final day of the 2024 Game Developers Conference. It's been amazing spending this time with the global game dev community, and we're thrilled for what today has in store.

We've reached Day 4 of the 2024 Game Developers Conference! If you’re feeling a bit tired from last night’s amazing IGF and GDCA ceremony, we’ve got what you need to make it a fantastic Thursday at the event.

Baldur’s Gate 3, the critically acclaimed role-playing game by Larian Studios, was presented with the Game of the Year award during the 24th Annual Game Developers Choice Awards on Wednesday—taking home four awards over the course of the evening.

Venba is a narrative cooking game in which players experience life as an Indian mom who has immigrated to Canada with her family in the 1980s. Players cook various dishes and restore lost recipes, hold branching conversations and explore in this story about family, love, loss and more.

The stage is set for another stellar day of talks, roundtables, networking, and more at the 2024 Game Developers Conference. The GDC Expo opens today, core sessions have begun, and the Main Stage is set for a live concert—along with the 2024 IGF Awards and Game Developers Choice Awards!

It's time for Day 2 of the 2024 Game Developers Conference! Tuesday features even more summits, sessions, sponsored summits, and workshops for our attendees.

It's the week we've all been waiting for: The 2024 Game Developers Conference has returned to the Moscone Center. We're kicking things off with an incredible first day of summits, talks, sponsored summits, and events.

It's almost time for the Game Developers Choice Awards and 25th annual Independent Games Festival Awards, happening at GDC 2024 in San Francisco on March 20. Here's how you can join us—whether in-person or watching the ceremony from home.

Game Maker’s Sketchbook, the annual celebration of art and artists in games, has revealed the official selections for its third year.

For the past few years, I've been honored to serve as Event Director of the Game Developers Conference. I love the community we've built, the organizations we've partnered with, and the city we call home. Bringing game developers together every year fills me with joy and pride.

We've put together this list of recommended GDC 2024 restaurants, along with a few bars with non-alcoholic options for after-hours get-togethers.

Following a standing-room-only show last year, Extra Lives: The Live Experience is returning to GDC 2024. This time, they're performing the exciting Live Audience RPG on the Main Stage, before the 2024 IGF Awards and Game Developers Choice Awards!

The 2024 Game Developers Conference is coming up, and now is the time to review the rules we have put in place for the event. Please take a moment to familiarize yourself with the GDC Code of Conduct before attending.